Hello,

I am running the latest 2.1.0 version of ShinyProxy in Kubernetes mode. I have noticed the main shinyproxy pod using an increasing amount of memory. Even when the app pods are terminated, the shinyproxy pod holds on to the memory. This led to our shinyproxy pod reaching its memory limit and being restarted.

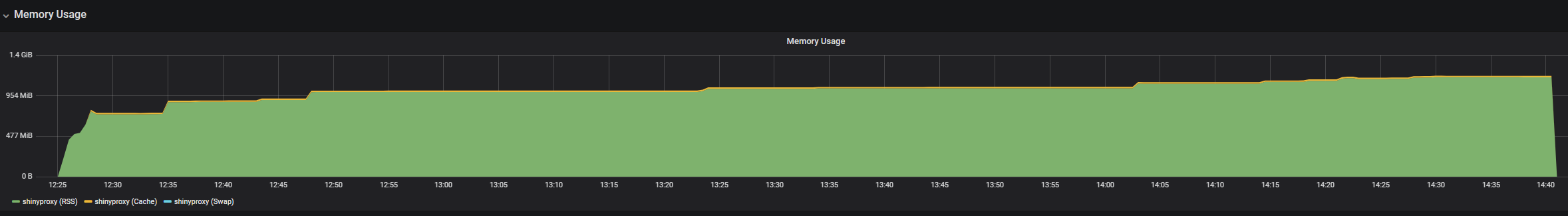

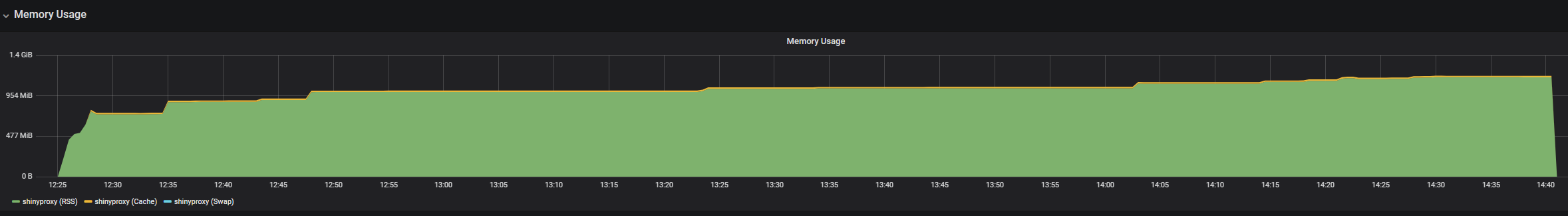

I was able to get a snapshot of memory usage in my dev environment. There were only ever two app pods running (both started by me). I would spin up two different apps, let them load, close the tab, wait for the pods to terminate, and do it all again. The snapshot covers almost 2.5 hours of memory usage. I would expect the memory to drop once applications are closed.

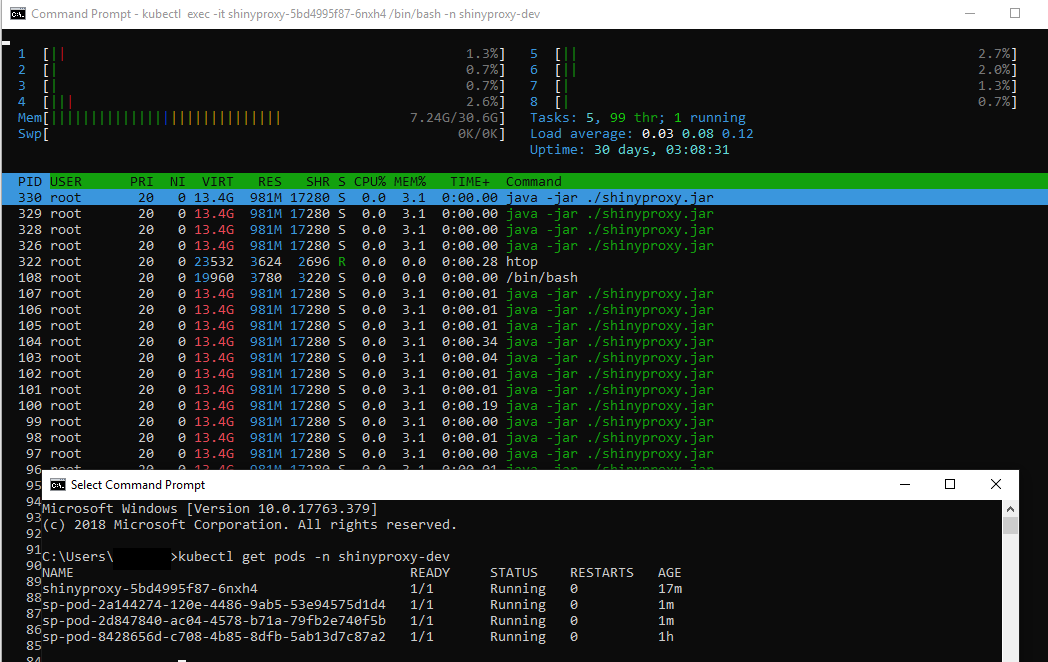

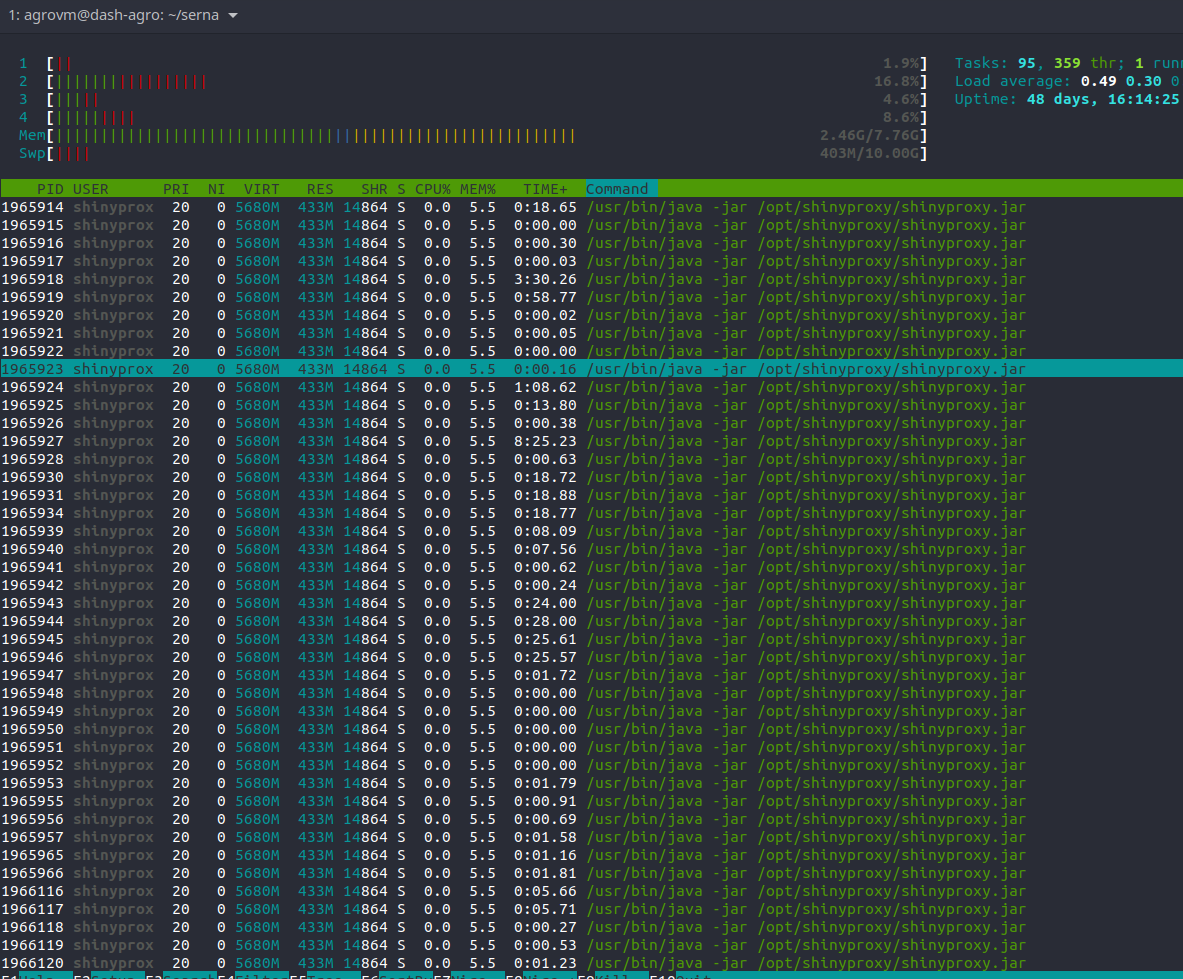

When I ran htop on the shinyproxy pod, I noticed a lot of shinyproxy threads. Is this normal? Each shiny app that created a pod got added as a thread and was never removed, even when the app was closed and the pod was terminated.

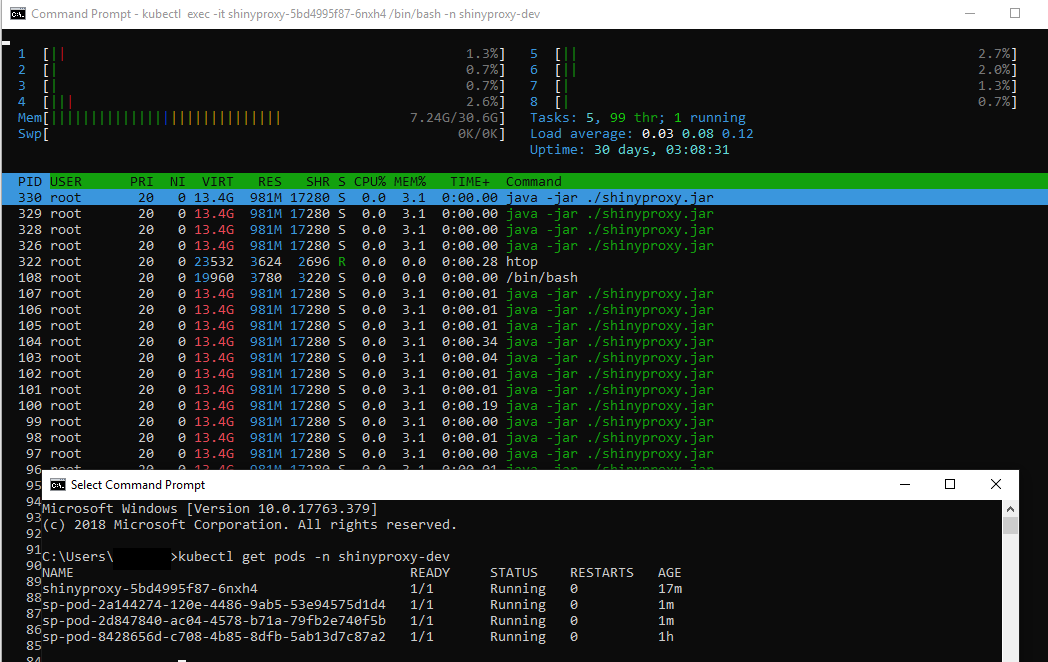

With pods starting up/running:

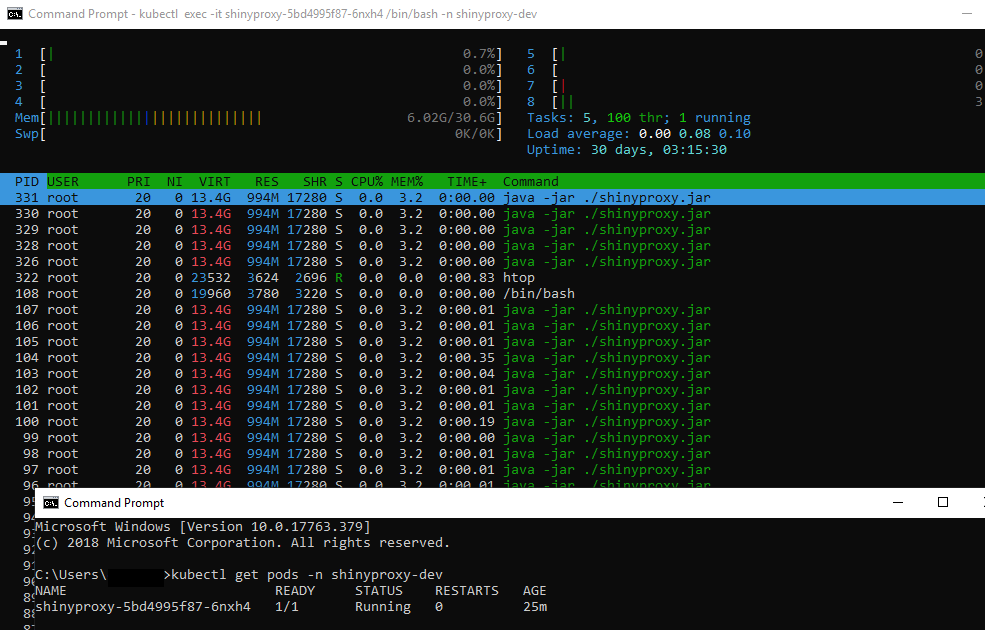

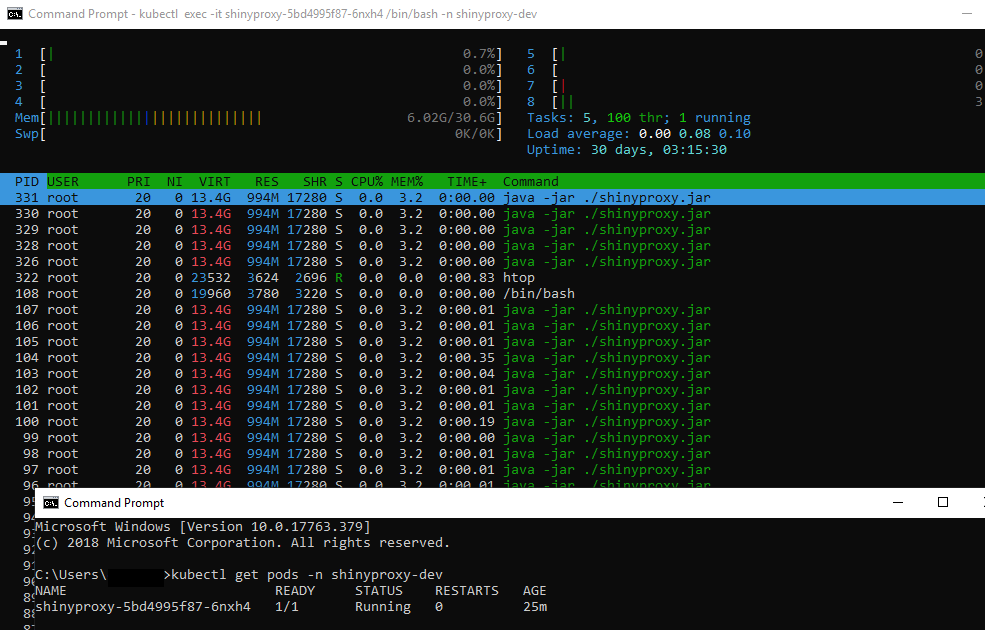

After closing all windows/tabs running shiny applications:

Should shinyproxy be running with a larger memory request and limit? Our dev environment has a limit of 2GB for the shinyproxy pod. Is it normal for all these threads to stick around? Does Java take a while to clean up memory?

Thanks,

Michael Calamera

Hi @MichaelCal,

In your screenshots I see a large number of processes, each running java -jar ./shinyproxy.jar.

This is indeed not the expected behaviour; only 1 such process should be expected. I would expect any subsequent launches to fail because the HTTP port is already in use by the first launch.

Can you provide more details about the configuration of your shinyproxy pod?

Hi @fmichielssen,

When the shinyproxy pod first comes up we see all these threads. Then as we launch each shiny app, we see more threads being created. It is one process.

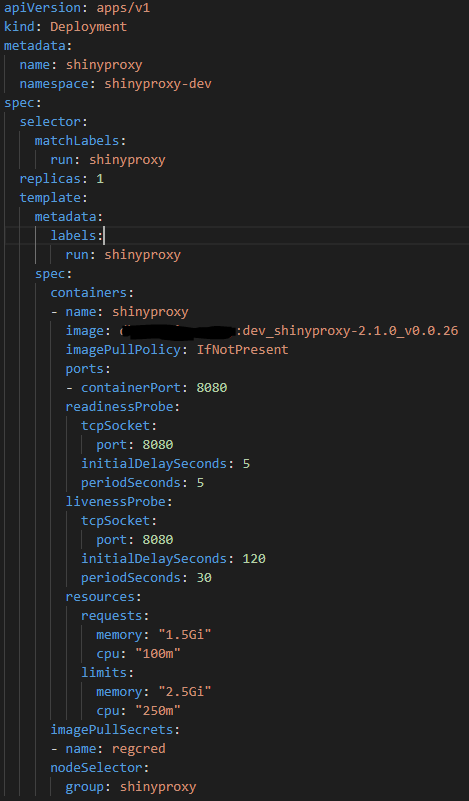

Here is a snapshot of our deployment yaml for the main shinyproxy pod:

Here is our dockerfile used to build the shinyproxy deployment pod:

FROM openjdk:8-jre

RUN mkdir -p /opt/shinyproxy/

RUN wget https://www.shinyproxy.io/downloads/shinyproxy-2.1.0.jar -O /opt/shinyproxy/shinyproxy.jar

COPY application.yml /opt/shinyproxy/application.yml

COPY dbrs_logo.png /opt/shinyproxy/www/dbrs_logo.png

COPY start_shiny_proxy.sh /opt/shinyproxy/start_shiny_proxy.sh

RUN mkdir /etc/certs/

COPY cert.pem /etc/certs/cert.pem

WORKDIR /opt/shinyproxy/

CMD ./start_shiny_proxy.sh

Where start_shiny_proxy.sh is:

set -e

sed -i "s/{SHINY_APP1_VER}/${SHINY_APP1_VER-NA}/" application.yml

sed -i "s/{SHINY_APP2_VER}/${SHINY_APP2_VER-NA}/" application.yml

java -jar ./shinyproxy.jar

(This is to avoid having to build a new shinyproxy image every time we update one of the underlying shiny apps. Instead, we replace the docker image values in application.yml before starting the process.)
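To illustrate the placeholder substitution described above, here is a minimal sketch. The helper name `fill_app1_version`, the sample line, and the version number are assumptions for illustration only; the real application.yml is not shown in this thread. Note that `${VAR-NA}` falls back to NA only when the variable is unset, not when it is set to an empty string.

```shell
# Hypothetical helper mirroring the sed lines in start_shiny_proxy.sh:
# replace the {SHINY_APP1_VER} placeholder read from stdin with the value
# of $SHINY_APP1_VER, or with NA when the variable is unset.
fill_app1_version() {
  sed "s/{SHINY_APP1_VER}/${SHINY_APP1_VER-NA}/"
}

# Assumed example line; not taken from the actual configuration.
line='container-image: example/app1:{SHINY_APP1_VER}'

# With the variable set, the placeholder becomes the version.
SHINY_APP1_VER=1.4.2
printf '%s\n' "$line" | fill_app1_version

# With the variable unset, the placeholder becomes NA.
unset SHINY_APP1_VER
printf '%s\n' "$line" | fill_app1_version
```

One caveat of this approach: a value containing a `/` would break the sed expression, since `/` is used as the delimiter.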

Ah, my bad, I’m not familiar with the htop program.

Some clarification about thread and memory usage by Shinyproxy:

- All requests are handled by an embedded HTTP server called Undertow, which uses XNIO worker threads to deal with requests in a (potentially) async manner. As a consequence, you may see the thread count quickly climb as more XNIO workers are spawned. But at some point, this number should stabilize as the worker pools max out.

- Memory is managed in the typical Java way: chunks are allocated from the heap, and only released during garbage collection runs. However, these released chunks are just returned to the Java heap, so on the OS level you won’t see this as freed up memory.

So for both thread count and memory usage: a steady increase does not necessarily indicate a problem, but it might.
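To check whether the numbers level off or keep growing, you could sample the thread count and resident memory of the single java process over time. The following is only a sketch: the helper names are made up, and it assumes a Linux container where /proc and awk are available. (htop lists userland threads as separate rows by default, which is why one process can look like many.)

```shell
# Hypothetical helpers (not part of ShinyProxy); Linux-only, they read /proc.

# Print the number of threads in the process with the given PID.
thread_count() {
  awk '/^Threads:/ {print $2}' "/proc/$1/status"
}

# Print the resident set size (RSS) of the process in kB, as the OS sees it.
rss_kb() {
  awk '/^VmRSS:/ {print $2}' "/proc/$1/status"
}

# Print one timestamped sample of threads and RSS for the given PID.
sample() {
  printf '%s threads=%s rss_kb=%s\n' \
    "$(date +%H:%M:%S)" "$(thread_count "$1")" "$(rss_kb "$1")"
}

# Example: sample the current shell. For ShinyProxy, pass the PID of the
# java process instead.
sample "$$"
```

Running `sample` every few minutes while starting and stopping apps should show whether the thread count stabilizes and whether RSS stays below the pod limit.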

If your pod runs out of memory, the Java heap size may be too large relative to the available RAM. You can specify a heap limit with the -Xmx argument:

java -Xmx1200m -jar ./shinyproxy.jar
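If you prefer to derive the heap limit from the pod's memory limit rather than hard-coding it, a small helper along these lines could work. This is a sketch only: the function name `heap_flag` and the default of 60% are assumptions, chosen to leave headroom for the memory the JVM uses outside the heap (thread stacks, metaspace, and so on).

```shell
# Hypothetical helper: derive a -Xmx value from a container memory limit.
# Usage: heap_flag LIMIT_MB [PERCENT]
# PERCENT defaults to 60, an assumed value rather than a recommendation.
heap_flag() {
  limit_mb=$1
  percent=${2:-60}
  printf '%s\n' "-Xmx$(( limit_mb * percent / 100 ))m"
}

# For the 2 GB (2048 MB) dev limit mentioned in this thread:
heap_flag 2048   # prints -Xmx1228m

# In start_shiny_proxy.sh the last line could then become (not run here):
#   java "$(heap_flag 2048)" -jar ./shinyproxy.jar
```

The result is close to the 1200m used in the command above.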

Hi @fmichielssen

I have a similar issue, but I have deployed ShinyProxy using the .deb package in normal mode.

How do I reduce the number of Java processes in this case?

Best regards