I’m testing ShinyProxy 3.0.2 with Redis and Keycloak. I run 3 ShinyProxy instances on 3 different machines and use an nginx reverse proxy to distribute requests across the backend servers.

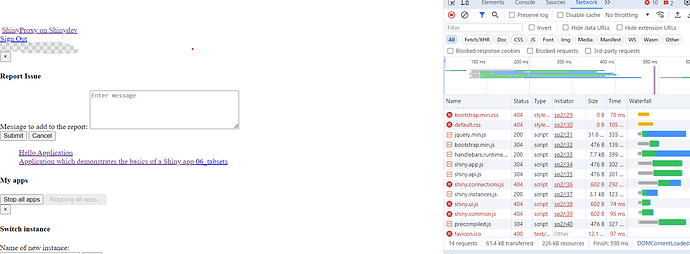

When I use ip_hash for the upstream servers, everything works fine. With the round-robin or least-connected load-balancing methods, as the attached screenshot shows, the ShinyProxy pages fail to load and apps can’t be launched because some JS and CSS files can’t be loaded.

Could you advise how to avoid this issue when using the round-robin or least-connected load-balancing methods? Thanks in advance.

Here are the ShinyProxy settings:

    logging:
      file:
        shinyproxy.log
      level:
        root: DEBUG
        #root: INFO
    management:
      metrics:
        export:
          prometheus:
            enabled: true
    server:
      forward-headers-strategy: native
      frame-options: sameorigin
      secure-cookies: true
      contextPath: /sp/
      servlet:
        context-path: /sp/
    spring:
      session:
        store-type: redis
      redis:
        host: 192.168.121.12
        password: redis-stack
    proxy:
      title: ShinyProxy on Shinydev
      landingPage: /
      my-apps-mode: Inline
      port: 3080
      useForwardHeaders: true
      #hide-navbar: true
      heartbeat-rate: 10000
      heartbeat-timeout: 120000
      container-wait-time: 60000
      admin-groups: ADMIN
      authentication: keycloak
      keycloak:
        auth-server-url: https://shinyproxy.example.com
        realm: shinyproxy
        resource: xxxxxxxxxxxxxxxxxxxxx
        credentials-secret: xxxxxxxxxxxxxxxxx
        ssl-required: external
        name-attribute: email
      #authentication: simple
      usage-stats-url: micrometer
      docker:
        internal-networking: true
      container-log-path: /logs/container
      work-directory: /shinyproxy
      specs:
      - id: 01_hello
        display-name: Hello Application
        description: Application which demonstrates the basics of a Shiny app
        container-cmd: ["R", "-e", "shinyproxy::run_01_hello()"]
        container-image: openanalytics/shinyproxy-demo
        container-network: shinyproxy
        access-group: [TESTER,USER]
      - id: 06_tabsets
        container-cmd: ["R", "-e", "shinyproxy::run_06_tabsets()"]
        container-image: openanalytics/shinyproxy-demo
        container-network: shinyproxy
        access-group: [TESTER,DEVELOPER]

Here are the nginx settings:

    upstream shinyproxy {
        least_conn;
        #ip_hash;
        server 192.168.121.228:3080; # node01
        server 192.168.121.223:3080; # node02
    }

    location /sp/ {
        proxy_pass http://shinyproxy;
        expires 1h;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_read_timeout 600s;
        proxy_redirect off;
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
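
For comparison, the only difference in the setup that works is the balancing directive in the upstream block. A minimal sketch of that variant, assuming the location block and everything else stay exactly as above:

```nginx
upstream shinyproxy {
    ip_hash;        # pins each client IP to one backend; pages and apps load fine
    #least_conn;    # with this (or the default round-robin) some js/css files fail to load
    server 192.168.121.228:3080; # node01
    server 192.168.121.223:3080; # node02
}
```

So the problem only appears when consecutive requests from the same browser can land on different ShinyProxy instances.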